|

How to Load More Than One Event Log |

Scroll Previous Topic Top Next Topic More |

This “how to” explains how to

•load several event logs into one app,

•generate several process perspectives on one event log and

•create a data model with these different event logs.

To understand the following instructions, we assume that you are already familiar with “Deploy the MPM Template App” and the “MPM Data Model”.

Loading more than one event log or several different views on one event log into the app will allow you to analyze the process form different perspectives like activity-based perspective, resource-perspective, organizational-perspective or control-flow-perspective.

The explanation will be illustrated by taking the Helpdesk example. The aim in the example is to create not only the activity-based perspective, but also a user-based perspective. The activity-based perspective will be the main event log on which every analysis in the MPM Template App will be available. The user-based perspective will only be an additional view, for which some analysis will be disabled.

1.Open the MPM Template App that you’ve already filled by following the steps in “Deploy the MPM Template App”.

2.Open the script editor.

3.Create a new tab for the new process perspective.

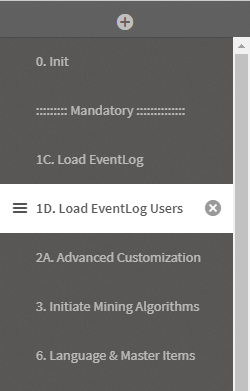

a)Copy the content of tab "1C. Load EventLog" to the new tab.

b)Delete the parts of "SETTING CASE CONTEXT MASTER DIMENSIONS" from the new tab - this is only for the "main" event log.

c)Name the tab like the process perspective you will create, e.g. "1.D Load EventLog Users".

d)Drag the new tab after the tab "0. Init".

Example

4.Change the definition of the table EventLog according to the new process perspective you would like to create or load another EventLog here, as you have done before when preparing the MPM Template App.

Example

We would like to create a user-centric analysis. Therefore, we change the definition of the fields ActivityType and ActivityTypeID from formerly "ActivityName" to "User". We do this by simply replacing "ActivityName" by "User" for the fields ActivityType, ActivityTypeID and in the where filter. For data modeling reasons, we decided to keep the "User" in an extra field "User" and the "ActivityName" in an extra field "ActivityName".

|

Do not load event information with the same fieldname in the two EventLogs. Notice, that in the example we deleted the field RealUser - it would have created a circular reference or synthetic key. |

Before: Activity-perspective

|

EventLog: //Log table name: "EventLog" LOAD //ProcessAnalyzer information CaseID, "ActivityName" as ActivityType, AutoNumber(ActivityName) + 100 as ActivityTypeID, Timestamp(ActivityStartTimestamp) as ActivityStartTimestamp, Timestamp(ActivityEndTimestamp) as ActivityEndTimestamp, //Event information //Here you can add other event-related information (dimensions and values) "UserName" as ActivityUserName, if(WildMatch("UserName", 'batch'), 0, 1) as RealUser //delete if no user available FROM [$(DataConnection)/MPMData.qvd] (qvd) where len(ActivityName) > 1;

|

After: User-Perspective

|

EventLog: //Log table name: "EventLog" LOAD //ProcessAnalyzer information CaseID, "User" as ActivityType, AutoNumber("User") + 100 as ActivityTypeID, Timestamp(ActivityStartTimestamp) as ActivityStartTimestamp, Timestamp(ActivityEndTimestamp) as ActivityEndTimestamp, //Event information //Here you can add other event-related information (dimensions and values) "ActivityName" as ActivityName, "User" as User FROM [$(DataConnection)/MPMData.qvd] (qvd) where len(UserName) > 1; |

5.Once the EventLog table is defined, fill in or delete the section where you define activity groups and custom lead times for the new process perspective. How to define activity groups, please refer to Define Activity Groups for Hierarchical Mining. How to define custom lead times, please refer to Define Subprocesses in the MPM EventLogGeneration Apps.

a)If you use grouping or hierarchies, please name the two tables uniquely. In our example we would name them "Groups_Users" and "Subprocesses_Users". Thus, you prevent inexpected behaviour like table concats.

6.If you are interested in deriving information on unfinished cases for this process perspective, define the variable "LET mvProcessFinished" - otherwise delete it.

7.Go to the tab "3. Initiate Mining Algorithms" and copy the method call "mw_pa_initMining" below the EventLog you are loading. Replace the first parameter with the name of the process perspective you are creating. Adapt the third and the last parameter if you don't use grouping or custom lead times.

Example

// :::::::::::::::::::::::::::::::::::::::: CALLING THE MPM-ALGORITHM ::::::::::::::::::::::::::::::::::::::: //

// Here the MPM-Algorithm is called.

// Please insert four parameters as in the example below.

// First parameter: scenario name

// Second parameter: log table name

// Third parameter: Grouping table name (leave empty '' if no grouping table is provided)

// Fourth parameter: Data connection where you would like to save the (temporary) .qvd-files to

// EXAMPLE: call mv_pa_initMining ('ScenarioName','EventLogTableName','GroupingTableName','FolderName', 'StoreQVDs');

// 1st parameter , 2nd parameter , 3rd parameter, 4th parameter, 5th parameter

call mw_pa_initMining('Users','EventLog','','$(DataConnection)','$(mvStoreMPMModelQVD)','');

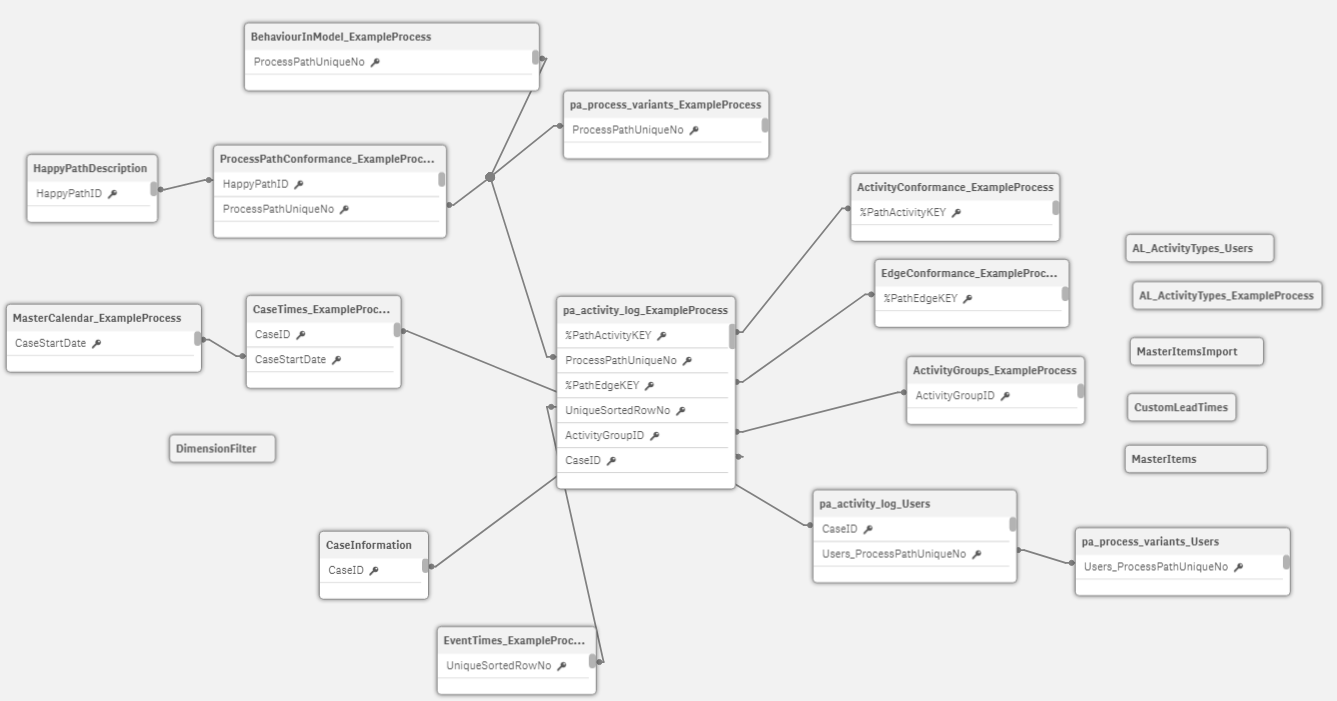

8.Qualify the MPM Data Model created. This is necessary, to not form circular references, synthetic keys or to concatenate the second MPM Data Model with the central process perspective.

a)Call the MPM qualify method for each table of the MPM Data Model that you would like to keep. In the example we will keep the pa_activity_log, the pa_process_variants and the AL_ActivityTypes.

|

The three tables are the minimum for functional usage of the MPM extensions. |

To call the qualify method, perform the following steps:

1.call the qualify method after the method mw_pa_initMining.

2.Enter the table name (which ends with the first parameter you put into mw_pa_initMining).

3.Enter the first parameter you put into "mw_pa_initMining" again as qualifier.

Example

call MW_qualify_FieldNames ('pa_activity_log_Users', 'Users');

call MW_qualify_FieldNames ('pa_process_variants_Users', 'Users');

call MW_qualify_FieldNames ('AL_ActivityTypes_Users', 'Users');

b)By qualifying the logs, you have lost the keys between the tables pa_acitivity_log and pa_process_variant. To restore them, do the following step for your data set:

rename field Users_Users_ProcessPathUniqueNo to Users_ProcessPathUniqueNo;

c)Also, you will need to create a key between the two process perspectives which is in our example the CaseID.

Example

left join (pa_activity_log_Users)

load Users_CaseID, Users_CaseID as CaseID

resident pa_activity_log_Users;

d)Qualify the tables for ActivityGroups and Subprocesses if you have defined them previously.

9.Drop all tables that you have not qualified in step 8. In our example, we will need to drop the tables CaseTimes and EventTimes.

10.Fill the rest of the MPM Template App script as described in Deploy the MPM Template App if you have not done it so far.

11.Press reload data. Your data model should be similar to our example: